Protobuf Encoding

In this post, we summarize Google’s Protobuf, based on its official documentation. To give a high-level overview of how Protobuf works and different components it has, many details are skipped (and can be found in the original documentation).

Disclaimer: this is more like a personal study note. I try my best to ensure correctness, but errors are unavoidable.

Overview

Protobuf is an encoding language that can represent your data in a cross-platform, cross-language way and encode your data to bytes for network transformation. With Protobuf, you can (but not limit to) do the following:

- Describe your data (e.g., classes) in a cross-language way (in

.protofiles), by using theProtobuflanguage. - Generate language-specific definitions of your data (e.g., Go struct, or Java class) from

.protofiles, by using theProtobufcompiler and language-specific code generation extensions. - Generate data in one language and encode the data to bytes (based on the

.protofiles). Then decode those bytes to objects in another language. - You can also send the bytes to another machine via network and decode there.

By using Protobuf, you only need to describe your data once and automatically generate its definition in different languages. It also enables you to generate data in one language, encode your data, and decode it to objects in another language.

Protobuf mainly consists the following components:

- The

Protobuflanguage (and.protofiles): it defines how you can describe your structured data usingProtobuf, in.protofiles. Imagine how you define a struct using Go. These.protofiles will act as the contract for generating language-specific definitions and for encoding data to bytes (and vice versus). - Encoding/decoding methods: how Protobuf encodes your data to bytes and decodes bytes back.

Protobufcompiler: the main engine that encodes/decodes data and generates language-specific definitions.- Language-specific bindings: they provide support for different languages to work with Protobuf.

The Protobuf Language

In language like Go, you describe your data schema as a struct, by specifying the type (either predefined or defined by you or else) and name of each field in your data.

Similarly, in Protobuf a data schema is defined as a message. You also need to specify the type and name of each field in your message. Protobuf has a list of predefined types (e.g., string, int32, etc). And you can also nest a messge into another message. Fields can also be repeated (like an array), by specifying as repeated.

Field Numbers

The main difference in Protobuf compared to other languages is that each field has not one but two identifiers, a normal name AND a unique field number. The purpose of field numbers is to:

- Identify each field in Protobuf’s binary representation.

- Retrieve corresponding field names when decoding the binary representation, based on the

.protofiles.

To ensure backward and forward compatible, one field number should not be changed once assigned and only be used once even that field is deleted in the future. Also, due to how Protobuf encodes data (discussed below), lower field numbers (1-15) have less bytes (1 byte) and thus should be assigned to fields that appears frequently.

Notice that fields numbers neither imply an ordering in the binary representation, nor need to be consequent. Instead, they only acts as a mapping to field names.

Now, suppose we have a Go struct that defines a search request:

type SearchRequest struct {

Query string

PageNumber int32

ResultPerPage int32

}

We can describe the same data using Protobuf as

message SearchRequest {

string query = 1;

int32 page_number = 2;

int32 result_per_page = 3;

}

Notice that, if we use Protobuf, the corresponding Go definition can be generated automatically from the .proto file by Protobuf compiler and Protobuf Go binding.

How to write a complete .proto for your data is a long and complicated topic. The best reference is Protobuf’s official language guide.

Encoding

Now suppose we’ve described our data schema in a .proto file and produced some read data (say, 3 SearchRequest objects), how will Protobuf convert our SearchRequest objects into bytes, which can be converted back into objects, possibly in another language? That’s defined by the encoding method Protobuf used.

Varints and Base128

First we introduce some basic comcepts that Protobuf uses, such as base 128 varints.

Varints are a family of methods that serializing one integer using one or more bytes. Usually smaller integers take less bytes. For each byte, some bits are used for special purpose and the rest are used to represent (part of) the real data. For example, Base128 uses the most significant bit (msb) to indicate if there are still bytes to come (1: more bytes, 0: the last byte), while the rest 7 bits represent the real data.

So to encode an integer using Base128, we first convert it into binary, group the binary per 7 bits, reverse groups (least significant group first), set msp to 1 for all but the last group and 0 for the last group. Take 300 as an example:

0000010 0101100 # 7 bits per group

0101100 0000010 # reverse all groups

10101100 00000010 # add msb bits

ac 02 # encoded bytes in hex

Now to decode the bytes, we just need to reverse the steps:

ac 02 # bytes in hex

10101100 00000010 # bytes in bin

0101100 0000010 # remove msb bits

0000010 0101100 # reverse all groups

10 0101100 # real data in bin

300

Why we need to reverse those 7-bit groups? The benefit is that during decoding, we can start accumulating the data from the first group. If we don’t reverse, we will not know in which position each bit is until we approach the last group. By reversing, we know that the first group will be bit 0-7, the second be bit 8-14, and so on.

Base128 is the method used by Protobuf to encode varint-typed data value

Encoding Messages

Now let’d see how Protobuf encode a complete message, along with real data, to bytes. First Protobuf treats each field in a message as a key-value pair where the key contains metadata of the field including field number and wire type and the value is just the real data of the field. Some wire types also have extra metadata (e.g. length of the value) between the key and value.

The key and value is encoded and concatenated, and the complete encoded message is just the concatenation of all encoded key-value pairs.

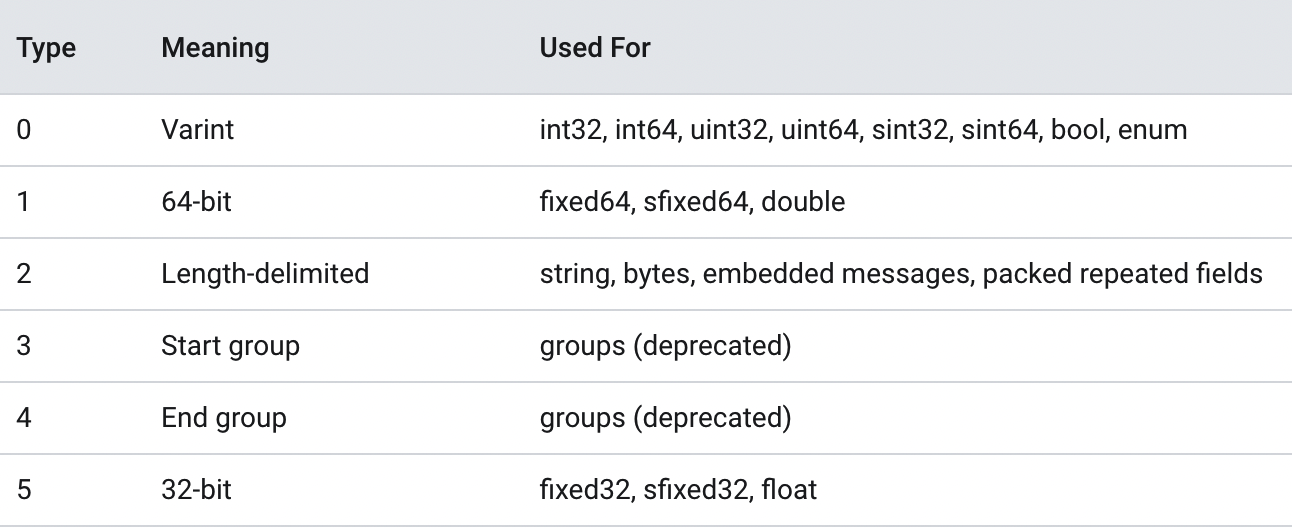

Protobuf categorizes fields into different wire types, each with a type number. Different wire types might means different value encoding methods, as discussed later.

Protobuf encodes each key as a variant (remember Base128), by using the value of (field_number << 3) | wire_type (remember that, to use Base128, first we need an integer).

Values are encoded based on their wire types. The most noticable two types are 0:Varint and 2:Length-delimited. Varint just means the value is encoded by Base128. For Length-delimited, we insert a varint-encoded length metadata between the key and value, meaning the following length of bytes are the encoded value. In the case of string, it’s just a UTF8 string bytes, whereas in the case of message, it’s the same as we described in this section.

For other wire types, their values have a fixed number of bits. Thus, Protobuf can encode and decode their values directly.

How Protobuf distinguish between string and embedded message, since both have the same wire type but use different methods to encode values: when Protobuf decodes the bytes, it still has the access to the .proto file, from where it can know the concrete field type.

Example

Now let’s go over an example. Suppose we have a SearchRequest object (written in Go, Protobuf definition is given above):

req := SearchRequest{Query:"abc", PageNumber:300, ResultPerPage: 5}

Let’s first encode field query whose field numer is 1 and wire type is 2:

(0001 << 3) | 010 # key calculation

1010 # key in bin

0001010 # group per 7 bits

00001010 # add msb (1 group, no reverse needed)

0c # key encoding

Given wire type 2, we need a varint-encoded length which is 3:

0011 # 3

00000011 # binary

03

Its value encoding will be the UTF of string abc, which is 61 62 63. So for field query key-value pair, we will have 0c 03 61 62 63.

Let’s quickly go through the steps for field page_number:

# key

(0010 << 3) | 000

10000

00010000

10

# value

300

ac 02

# complete key-value pair

10 ac 02

and field result_per_page:

# key

(0011 << 3) | 000

11000

00011000

18

# value

5

0101

05

# complete key-value pair

18 05

So the complete encoded bytes for the data we give will be 0c 03 61 62 63 10 ac 02 18 05.

Protobuf Compiler and Language Binding

In the last two parts we covered two of the main designs behind Protobuf: how to describe your schema and encode/decode your data. However, it’s the Protobuf compiler that makes all these functionalities work as expected and the various language bindings that provide Protobuf runtime support and enable Porbobuf to work seamlessly with specific programming languages.

Since the compiler is too low-level and the bindings are mainly language-specific implementation details, we will not cover the two here.

Extension

With Protobuf, you can now declare your data schema in .proto files and encode your language-specific objects to bytes. However, those byte data is still in your local machines. What if you want to use these data in a cluster or on different machines? Then you need to figure out a way in which you can send/receive these bytes.

You can definitely write your own web sockets to pass the Protobuf-encoded bytes and decode them to objects using the same .proto files. There are also open-source solutions such as gRPC that can help you do this and more.

Think that

Protobufitself is just an encoding system. Based on the Protobuf encoding,gRPCprovides support for remote communications and remote procedure calls, etc.

Remote Procedure Calls (or RPCs) enable a program to call methods/functions in another program which might be running in other machines. Different languages usually have their own RPC implementations that let you write RPC programs. However, the limitation is that both the local program (caller) and the remote program (callee) have to be written in the same languages.

Similar to Protobuf with which you can describe your data in a language-neutral way, gRPC lets you write RPC programs where the local program and remnote program can be written in different languages. By using gRPC, you delegate the RPC logics and network transformations to the gRPC runtime.

The role of Protobuf has in gRPC is that, you can write not only data schema (message), but also RPC call schemas (service). Then with the service descriptions in the .proto files, gPRC can serilize you RPC calls in local, pass and deserlize them in remote machines. Then the RPC calls are executed by the remote program and the results get returned back to the local program.

updated_at 21-11-2021I haven’t had a chance to learn more about

gRPC, so this section is only a brief overview. Probably I will write a blog aboutgRPCas well in the future!